As international writers expose themselves to considerable danger to protest injustice, look back on historical repression, or express radical ways of living in the world, the role of the translator to effectively render a story into another language grows ever more crucial.

“Translation is an art, but it’s also a science,” says Max Lawton, who translates Russian dissident writer Vladimir Sorokin. “You have to liberate the language from the source text and create something new.”

PW spoke with Lawton and other translators about how their work shines a light on issues of oppression and on our shared humanity.

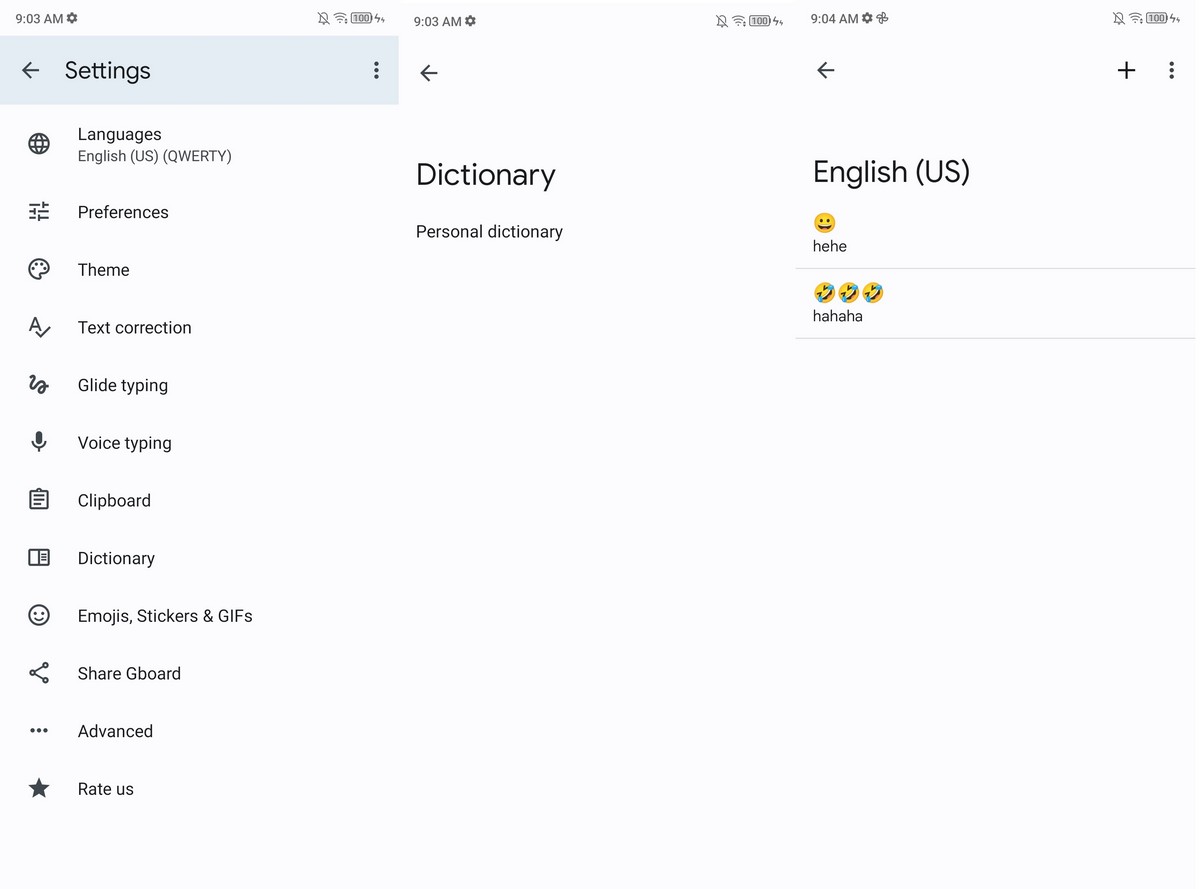

International datelines

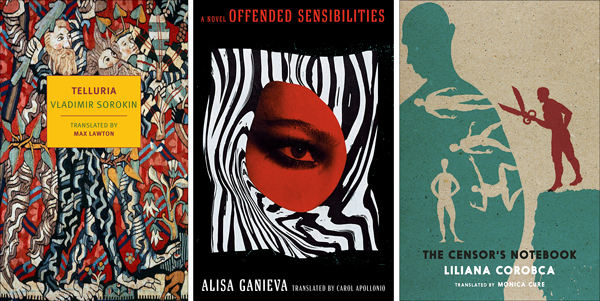

Sorokin’s Telluria (NYRB, Aug.), which PW’s review called “hypnotic and hallucinatory,” takes place in a fractured future of small states and principalities that arise after Russia splinters. The novel, mixing elements of speculative fiction against a feudal backdrop, typifies Sorokin’s defiance of convention and, according to Lawton, also demonstrates the subtlety of Sorokin’s political thought. “Sorokin’s work is political in a nuanced way, not polemical,” Lawton says. “If you’re transparent, the state likes you. They can see right through you. There is this sort of nontransparency in the language that Sorokin uses as an anti-authoritarian tactic. It’s a political choice as well as an aesthetic one.”

Translating Telluria has taken on new meaning for Lawton in the current political climate. “Telluria, on one hand, is a dystopia, but I actually think it’s much more of a utopia,” he says. “I didn’t understand this side of the book until this whole nightmare started in Ukraine, but the idea of Russia crumbling down into tiny nation-states that are all different is a utopian idea from the perspective of Russia’s history of territorial land grabbing.”

Similarly, Carol Apollonio, who translated the Russian political satire cum murder mystery Offended Sensibilities by Alisa Ganieva (Deep Vellum, Nov.), says the war in Ukraine has profoundly affected her translation work. “The world turned upside down February 24,” says Apollonio, who has translated two previous novels by Ganieva. “We’re all reeling in shock and anguish and horror. Alisa has left the country. She can’t stay there.” The novel examines political corruption and censorship in an unnamed provincial town, which Apollonio views as a stand-in for all of Russia. “The lies Alisa exposes in the book are so relevant,” she says.

Apollonio places Ganieva’s novel in a Russian literary tradition of political opposition. “Internal dissidents and thoughtful truth tellers like Alisa need to be respected because they’re putting their lives on the line. That’s an important political message we always get from Russian literature.”

In The Censor’s Notebook (Seven Stories, Oct.), Romanian author Liliana Corobca also probes political repression. Framed around a former censor who donates a stolen notebook from 1974 to a new museum of communism, the novel opens a window onto the secretive world of censorship during that era in Romania. “The story is so multilayered,” says translator Monica Cure. “It’s a kind of hodgepodge of notes the censor has taken, fragments of different novels and poems, all made up by the author but presented as if they were found.” Cure, whose grandfather was imprisoned and who herself was a refugee from the Communist regime in Romania, adds that translating the novel gave her a deeper appreciation for the importance of protecting freedom of expression. “It’s healthy to be able to talk about censorship, which is basically the illegitimate silencing of voices. Legitimacy is what we as a community decide on. What we get to do in a democracy is decide on what voices have been wrongly silenced.”

Writers often pay a huge price for work that criticizes or even questions the state’s authority. Uyghur writer Perhat Tursun’s novel The Backstreets (Columbia Univ., Sept.) follows a Uyghur migrant’s journey to an ethnically Han Chinese majority city. “It’s a story of alienation and a descent into madness,” says translator Darren Byler, an anthropologist who began working in Northwest China in 2011, met Tursun, and started translating the novel. To protect Tursun and his Uyghur cotranslator, Byler held off publishing, but when his cotranslator was disappeared and Tursun was arrested and sentenced to 16 years’ imprisonment as part of China’s mass internment of members of ethnic minorities, Byler decided the time was right. “Translating and finding a publisher for this work was an obligation I felt toward Tursun,” he says. “He had given me a lot. He’d become my friend.”

Byler hopes readers appreciate the book’s wisdom and insight. “It’s conveying something about life for Uyghurs and a Uyghur sensibility, a way of seeing the world that I think speaks to what it means to be human.”

Translator Paul G. Starkey was also moved to bring attention to an underrepresented cultural and literary tradition, with Hammour Ziada’s The Drowning (Interlink, Sept.), a historical novel set in 1968 Sudan. It’s “a vivid portrayal of social relations in a repressive and tightly regulated traditional Sudanese village,” Starkey says. “There is very little Sudanese literature available in English translation, so I saw this as a chance to make a hopefully interesting contribution to what’s available.” In the context of the profound upheavals that have taken place and are currently taking place in the wider Arabic-speaking world, the novel offers readers a portrait of those who live the region. “I hope that it may help to convey some sense of empathy with people in situations and conditions vastly different from our own,” Starkey says.

Love languages

The process of working so closely with a text can transform how a translator identifies with the story. In Concerning My Daughter (Restless, Sept.), by South Korean novelist Kim Hye-Jin, a lesbian activist and her partner begin living with her more conservative mother. Translator Jamie Chang was initially skeptical of the mother-daughter relationship. “I found myself thinking, why are you moving in with your mother and why are you taking your partner with you? This is going to be too much for her,” Chang says. “But that’s the thing about good stories. You put characters with strong opinions, strong feelings, and strong bonds in a pressure cooker and see what happens.” The result is a story of connection that crosses generations. “This is the kind of book you could read with your mother if you’re just coming out,” she adds. “By the time I finished I felt fully convinced it’s possible for a Korean lesbian and their partner to get along with their elderly mom. The solidarity between these women feels so realistic.”

Another novel pushing against convention is Hugs and Cuddles (Two Lines, Oct.), by Brazilian novelist João Gilberto Noll. Edgar Garbelotto, who translated two Noll novels prior to this one, has always been attracted to the ambition of Noll’s vision. “I was totally fascinated by the way he was writing, the language he was using—even thematically by the places he was going,” Garbelotto says. The novel follows a narrator who, inspired by a formative sexual experience in his youth, abandons his former life and sets out on a quest for personal liberation. In the process the narrator rejects his social standing, the constraints of his gender, and the sexual mores that previously inhibited him.

Noll’s liberatory message inspired Garbelotto. “I think one of the main motivations for a translator is your desire to share work that impressed you so much,” he says. “I hope readers can experience through Noll’s incredible prose this adventure of living freely, leaving what is expected from capitalism and society behind. We can live that experience through Noll’s characters. That’s the power of literature.”

Stories from unfamiliar cultural contexts can nonetheless resonate with readers. The Impatient (HarperVia, Sept.), by Cameroonian writer Djaili Amadou Amal, follows three women struggling to free themselves from forced marriage, polygamy, and abuse in a Cameroonian village. The novel’s critique of this atmosphere of sexual control and violence struck translator Emma Ramadan as universally relevant. “Women everywhere have this experience of being forced into situations and faced with misogyny and a toxic patriarchy,” Ramadan says. “We can be both interested in understanding what’s happening in other places and also use this novel as an opportunity to reflect on what’s happening in our communities. That’s the beauty of translation.”

The story of the women also highlighted for Ramadan the importance of finding unity in a common cause. “One of the lessons of the book is that we as women—and really any oppressed group—are stronger when we bind together,” she notes.

The Impatient and other novels discussed here present readers with an opportunity to find common ground with cultures and societies from around the world. “Our role as translators is not just to bring something over but also to allow a conversation to happen here about it,” Ramadan says. The goal of translation, she explains, is to have “a conversation that doesn’t foreignize or other or distance, but that brings this story home and forces us to examine ourselves in the ways we’re examining the characters in a book.”

Matthew Broaddus is a poet and associate poetry editor at Okay Donkey Press.

Read more from our Literature in Translation feature.

Identity Papers: Literature in Translation 2022

These new translated works of fiction challenge questions of identity and what it means to belong.

The Language of the Body: PW Talks with Stephanie McCarter

In her forthcoming translation of Ovid’s 'Metamorphoses' (Penguin Classics, Sept.), classicist McCarter renders the poet’s concern with questions of power, violence, and gender intelligible to a contemporary audience.

A version of this article appeared in the 06/06/2022 issue of Publishers Weekly under the headline: Pushing