Monday, March 7, 2022

Meta's machine translation journey - Analytics India Magazine - Translation

There are around 7000 languages spoken globally, but most translation models focus on English and other popular languages. This excludes a major part of the world from the benefit of having access to content, technologies and other advantages of being online. Tech giants are trying to bridge this gap. Just days back, Meta announced that it plans to bring out a Universal Speech Translator to translate speech from one language to another in real-time. This announcement is not surprising to anyone who follows the company closely. Meta has been devoted to bringing innovations in machine translations for quite some time now.

Let us take a quick look back into the major highlights of its machine translation journey.

2018

Scaling NMT

Meta used neural machine translation (NMT) to automatically translate text in posts and comments. NMT models are good at learning from large-scale monolingual data, and Meta was able to train an NMT model in 32 minutes, a drastic reduction from the earlier training time of 24 hours.

Open-sourcing LASER

In 2018, Meta also open-sourced the Language-Agnostic SEntence Representations (LASER) toolkit. It works with over 90 languages that are written in 28 different alphabets. LASER computes multilingual sentence embeddings for zero-shot cross-lingual transfer. It works on low-resource languages as well. Meta said that “LASER achieves these results by embedding all languages jointly in a single shared space (rather than having a separate model for each).”
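To make the idea of a single shared embedding space concrete, here is a minimal sketch. It does not call the LASER toolkit; the three-dimensional vectors are made up for illustration (real sentence embeddings have far more dimensions), and the helper names `cosine` and `nearest` are hypothetical. It only shows why a shared space lets a sentence in one language be matched to its closest counterpart in another.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Made-up embeddings: assume one encoder placed both languages in the same space.
english = {
    "The cat sleeps.": [0.90, 0.10, 0.00],
    "It is raining.": [0.00, 0.20, 0.90],
}
french = {
    "Le chat dort.": [0.88, 0.15, 0.05],
    "Il pleut.": [0.05, 0.25, 0.85],
}

def nearest(query_vec, candidates):
    """Return the candidate sentence whose vector is closest to query_vec."""
    return max(candidates, key=lambda s: cosine(query_vec, candidates[s]))

for sentence, vec in french.items():
    print(sentence, "->", nearest(vec, english))
```

Because both languages live in one space, the same nearest-neighbour lookup would work for any language pair the encoder covers, with no pair-specific model.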

2019

Wav2vec: unsupervised pre-training for speech recognition

Today, many technologies, such as GPS and virtual assistants, depend on speech recognition. But most of these systems rely on English, so a large number of people who do not speak the language, or who speak it with an accent the systems do not recognise, are excluded from such an easy and important way of accessing information and services. Wav2vec set out to solve this, with Meta focusing on unsupervised pre-training for speech recognition. Wav2vec is trained on unlabeled audio data.

Meta adds, “The wav2vec model is trained by predicting speech units for masked parts of speech audio. It learns basic units that are 25ms long to enable learning of high-level contextualised representations.”

As a result, Meta has been able to build speech recognition systems that perform far better than the best semi-supervised methods, even with 100 times less labelled training data.
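The masked-prediction setup in Meta's description can be pictured with a small sketch. Only the 25ms unit length comes from the quote above; the span length, the number of spans and the helper names are assumptions chosen for illustration, and no actual model is trained here. The sketch just counts how many units a clip contains and picks which ones would be hidden from the model.

```python
import random

UNIT_MS = 25  # unit length quoted by Meta

def units_in(duration_ms):
    """Number of whole 25ms units in a clip of the given length."""
    return duration_ms // UNIT_MS

def mask_units(n_units, span, n_spans, seed=0):
    """Mark n_spans runs of `span` consecutive units as masked (runs may overlap)."""
    rng = random.Random(seed)
    masked = [False] * n_units
    for _ in range(n_spans):
        start = rng.randrange(0, n_units - span + 1)
        for i in range(start, start + span):
            masked[i] = True
    return masked

n = units_in(1000)                       # one second of audio
mask = mask_units(n, span=4, n_spans=3)  # hypothetical masking settings
targets = [i for i, hidden in enumerate(mask) if hidden]
print(n, "units; the model would have to predict units at positions", targets)
```

During pre-training, the model would see the unmasked units and be scored on how well it predicts the hidden ones, which is why no transcripts are needed at this stage.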

2020

M2M-100: Multilingual machine translation

2020 was an important year for Meta, in which it released several models that advanced machine translation technology. M2M-100 was one of them. It is a multilingual machine translation (MMT) model that translates between any pair of 100 languages without depending on English as an intermediary. M2M-100 is trained on a total of 2,200 language directions. According to Meta, the model aims to improve the quality of translations worldwide, especially for speakers of low-resource languages.
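A quick back-of-the-envelope calculation puts the 2,200 figure in context. The sketch below uses only the numbers in the paragraph above (100 languages, 2,200 trained directions); the function name is hypothetical, and the English-centric comparison is simple counting rather than a claim about any particular earlier model.

```python
def direction_count(n_languages):
    """Ordered (source, target) pairs among n languages, excluding self-pairs."""
    return n_languages * (n_languages - 1)

N_LANGUAGES = 100
TRAINED_DIRECTIONS = 2200  # figure reported for M2M-100

all_directions = direction_count(N_LANGUAGES)   # every possible direction
english_centric = 2 * (N_LANGUAGES - 1)         # only to-English and from-English
share_trained = TRAINED_DIRECTIONS / all_directions

print(all_directions)           # 9900
print(english_centric)          # 198
print(round(share_trained, 3))  # 0.222
```

So the directions used in training cover roughly a fifth of the 9,900 possible ones, yet that is about eleven times as many as a setup that only pairs each language with English.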

CoVoST: multilingual speech-to-text translation

CoVoST is a multilingual speech-to-text translation corpus from 11 languages into English. What makes it unique is that CoVoST covers over 11,000 speakers and over 60 accents. Meta claims that it is “the first end-to-end many-to-one multilingual model for spoken language translation.”

2021

FLORES-101: low-resource languages

Following M2M-100’s footsteps, in the first half of last year, Meta open-sourced FLORES-101. It is a many-to-many evaluation data set that covers 101 languages globally with a focus on low-resource languages that lack extensive datasets even now. Meta added, “FLORES-101 is the missing piece, the tool that enables researchers to rapidly test and improve upon multilingual translation models like M2M-100.”

2022

Data2vec

In 2022, Meta released data2vec, calling it “the first high-performance self-supervised algorithm that works for multiple modalities.” It was applied to speech, text and images separately and it outperformed the previous best single-purpose algorithms for computer vision and speech. Data2vec does not rely on contrastive learning or reconstructing the input example.

Sunday, March 6, 2022

Legendary Dating Sim Tokimeki Memorial Finally Gets a Fan Translation - Twinfinite - Translation

Few games are as iconic within the dating simulation genre as Tokimeki Memorial by Konami, despite the fact that it was never officially localized in English.

Now, fan translators at Translated Games have finally filled the gap, providing a patch for the SNES version of the game.

The patch can be downloaded here, and it even comes with a few extra goodies.

Released in 1994 for PC Engine CD, the game received quite a few ports over time, and the one we’re looking at here is the SNES port released in 1996, titled Tokimeki Memorial: Densetsu no Ki no Shita de (Under the Legendary Tree).

The series has continued to this day in Japan, even if most of the latest games are for mobile platforms, while only the “Girl’s Side” sub-series has been released on consoles in recent years. Tokimeki Memorial: Girl’s Side 4th Heart was launched for Nintendo Switch in 2021.

The original game has you play the role of a high-school student who is in love with the inevitable childhood friend Shiori Fujisaki.

Through the most classic dating sim gameplay, you have to organize your day to improve in various fields, while courting Shiori herself or a variety of additional heroines, all the way to the climactic confession at the end of high school.

Friday, March 4, 2022

The Dirtiest Word In The Financial Dictionary - Money and Markets - Dictionary

When discussing the so-called “bad or offensive words” of the financial world, one term stands above all: STAGFLATION!

Were you a fan of George Carlin?

I know I was. I truly believe he was one of the most brilliant social commentators of our time.

Note that I called him a social commentator, not a comedian.

The truth is that while Carlin was funny and was considered a stand-up comedian…I never looked at him as such.

To me, he was an observationist that was able to cut to the truth of our society, and he did it while making people smile and laugh, even when that truth wasn’t pleasant to hear.

Funny? Yes…

But his insight was worth so much more than just “stand-up material,” so to call him a comedian does a great disservice to who he really was.

One of his greatest “bits” was his commentary on the seven words that you can’t say on television. I won’t list them here…but I bet you can guess what some of them are.

Anyway, the segment showed just how ridiculous it is to have words that we can’t say because they’re offensive, since being offended is entirely subjective.

Subjectivity creates a slippery slope. We don’t know where the line is drawn…or even who gets to draw that line.

The Dirtiest Financial Word

However, in the financial world, we’re a bit luckier. The words that “offend” are almost universal.

To list a few: foreclosure, depression, insider trading, recession, inflation, short-seller…

These kinds of terms get our hackles up–some more than others–but even so, there are certain people who don’t mind these terms, which brings us back to the subjective portion of this conversation.

However, there is one term that is all-but-guaranteed to ruffle feathers across the board.

That disgusting term is Stagflation.

Did you just shudder at the mere mention of the word? Do you feel like you need a shower? Can’t say I blame you…

Stagflation is the most disgusting word in the financial dictionary because it represents the non-movement of our economy.

Say what you want about the other terms, but at least there’s movement involved. We know what to expect and what to do…

When the word “stagflation” gets thrown into the mix, we’re stuck in limbo.

Now, why are we even talking about stagflation?

Well, because there are more than a few analysts talking about it in the financial news world right now. The war in Ukraine could easily exacerbate the global inflation that has been driving up prices on everything from food and gas to flights and hotels.

While a recession could be possible, we’re not even in a bear market yet, so that’s a ways down the road.

But stagflation? That could happen… easily.

What is stagflation?

Well, most consider “stagflation” an economic period of high prices coupled with little to no growth in the economy. It’s a pretty simple definition, though it’s hard to pinpoint what the “high” and “low” are because, again, that stuff can be subjective.

However, what’s important to understand is that historically, most of our economic recoveries ended with stagflation before turning into a recession–and seeing how we’ve had one of the longest recoveries to date since the crash of 2008, we may be overdue.

Battling Stagflation: Where To Look

So, if stagflation is on the table, what do we as investors do to combat it?

Well, we start by finding financial havens that tend to grow during weird financial times.

When it comes to combating stagflation, you want to find stocks that have STRONG earnings ability with a healthy cash flow. That means looking in the financials, materials, energy, consumer services, and healthcare sectors for those quicker returns.

Or, if you think that stagflation is going to hover over our heads for a while, then you may want to find some longer-term prospects in domestic small-caps or even some emerging markets plays–save for ones in China, of course. You may want to avoid investing there.

However, if stagflation is HERE, there’s really only one true kryptonite to bring it down, and that’s commodities.

Metals, oil, natural gas–these are the safest places to be during a stagflationary period. Knowing all of this BEFORE it’s here will go a long way towards making sure we’re as prepared as possible.

As much as I hate talking so negatively lately, the fact of the matter is that I don’t have a choice. My job isn’t to pull the wool over your eyes; it’s to show you all of the possibilities laid out before us, good or bad.

I’d rather you know than be caught with your pants down.

I believe that information defeats fear of the unknown…

And if you’re not afraid, you can act accordingly to profit as much as possible.

“The oldest and strongest emotion of mankind is fear, and the oldest and strongest kind of fear is fear of the unknown” – H.P. Lovecraft

Thursday, March 3, 2022

Baidu Launches AI Platform to Enable on-Device, Real-Time Translation from Speech to Hand Gestures - Yahoo Finance - Translation

BEIJING, March 3, 2022 /PRNewswire/ -- Baidu AI Cloud, a leading AI cloud provider, launched an AI sign language platform able to generate digital avatars for sign language translation and live interpretation within minutes. Released as a new offering of Baidu AI Cloud's digital avatar platform XiLing, this platform aims to help break down communication barriers for the deaf and hard-of-hearing (DHH) community by boosting the accessibility of automated sign language translation. An AI sign language interpreter developed using the platform will perform its duties during the upcoming Beijing 2022 Winter Paralympics Games.

Also released along with the platform are two all-in-one AI sign language translators, providing one-stop solutions with a streamlined set-up process and plug-and-use features. By enabling public service deployment at scale, the translators have been designed for a wide range of use scenarios such as hospitals, banks, airports, bus stations and other public areas.

With the technology enablement brought by AI, the production and operational costs of digital avatars have been reduced to a significant degree, making it possible for AI sign language to scale up and serve more deaf and hard-of-hearing individuals, said Tian Wu, Baidu Corporate Vice President.

Today, China is home to 27.8 million deaf and hard-of-hearing (DHH) individuals but is faced with a massive shortage of qualified professionals to serve their needs, with no more than 10,000 sign language translators, a gap especially felt in medical and legal settings.

The XiLing AI sign language platform and the all-in-one sign language translators are designed to fill this significant gap and address the communication difficulties facing the DHH community in both online and offline settings. For DHH individuals who want to study or socialize online without barriers, the platform can be quickly integrated into commonly used mobile applications, websites, and mini programs within a few hours, performing functions like sign language video synthesis and livestream synthesis, text-to-sign language translation, and audio-to-sign language translations.

The all-in-one translators are tailored for offline scenarios to improve the accessibility of public services. Baidu's translators come with two models, a full offline version V3, and a cloud-connected version P3. Both are embedded with core functions of the AI sign-language platform, able to realize ASR speech recognition, speech translation, and portrait rendering. This full range of functions offers incredible potential for empowering the DHH. For instance, DHH individuals will be able to visit the hospital and manage the complicated process of registration, consultation, payment, and medicine collection without further assistance. Additional applications hold the potential to allow the DHH community to travel, dine, and even work independently.

Technical Deep Dive

Compared to translation between spoken languages, sign language translation is more complicated, mainly because it is not translated word by word from verbal speech. Instead, the wording must be refined and the word order adjusted to convey the actual meaning of the sentence. Because sign language is relatively rarely used, only a very limited amount of data is available for machine learning. It also relies on lip movements and facial expressions to assist understanding. In real-world settings, solutions often face complex environmental factors that make them difficult to deploy. All these practical barriers have posed numerous challenges to the development of AI sign language.

To make AI sign language comprehensible, Baidu scientists had to resolve three key challenges: the clarity of speech recognition, the accuracy of sign language translation, and the fluency of sign language movements.

To address speech recognition clarity, the XiLing AI sign language platform uses Baidu's home-grown SMLTA speech recognition model to achieve end-to-end modeling speech recognition through integrating acoustics and language. Based on Baidu's self-developed deep learning algorithm, targeted training can enable word accuracy in a wide range of fields such as tourism, medical care, and legal proceedings.

In terms of the accuracy and refinement of sign language translation, Baidu has built the first neural network-based sign language translation model with a controllable degree of refinement, which can automatically learn sign language translation knowledge from real data such as word order adjustment, word mapping and length control to generate natural sign language that conforms to the habits of hard-of-hearing people.

To ensure the accuracy of the sign language translation, Baidu has invited over 500 scholars and students with hearing loss in China to help enlarge and vet the sign language corpus, with many joining the project as volunteers. Tiantian Yuan, associate dean of Technical College for the Deaf, Tianjin University of Technology, said she and her students feel incredibly honored to have contributed their parts in collaborating with Baidu to fill in this gap for the community.

To ensure the fluency of sign language actions, the AI sign language platform has sorted nearly 11,000 actions based on the National Universal Sign Language Dictionary with its "action fusion algorithm", so that all digital sign language gestures have the same degree of coherence and expressiveness as human sign language. In addition, with the help of 4D scanning technology, the accuracy of mouth shape generation has been optimized to reach up to 98.5%.

About Baidu:

Founded in 2000, Baidu's mission is to make the complicated world simpler through technology. Baidu is a leading AI company with a strong Internet foundation, trading on the NASDAQ under "BIDU" and HKEX under "9888." One Baidu ADS represents eight Class A ordinary shares.

Media Contact

intlcomm@baidu.com

SOURCE Baidu, Inc.

How to Use Your Smartphone's Translator Apps - AARP - Translation

If you're feeling the urge to travel abroad, but it has been decades since you took high school Spanish, French or some other language, you probably could use some help.

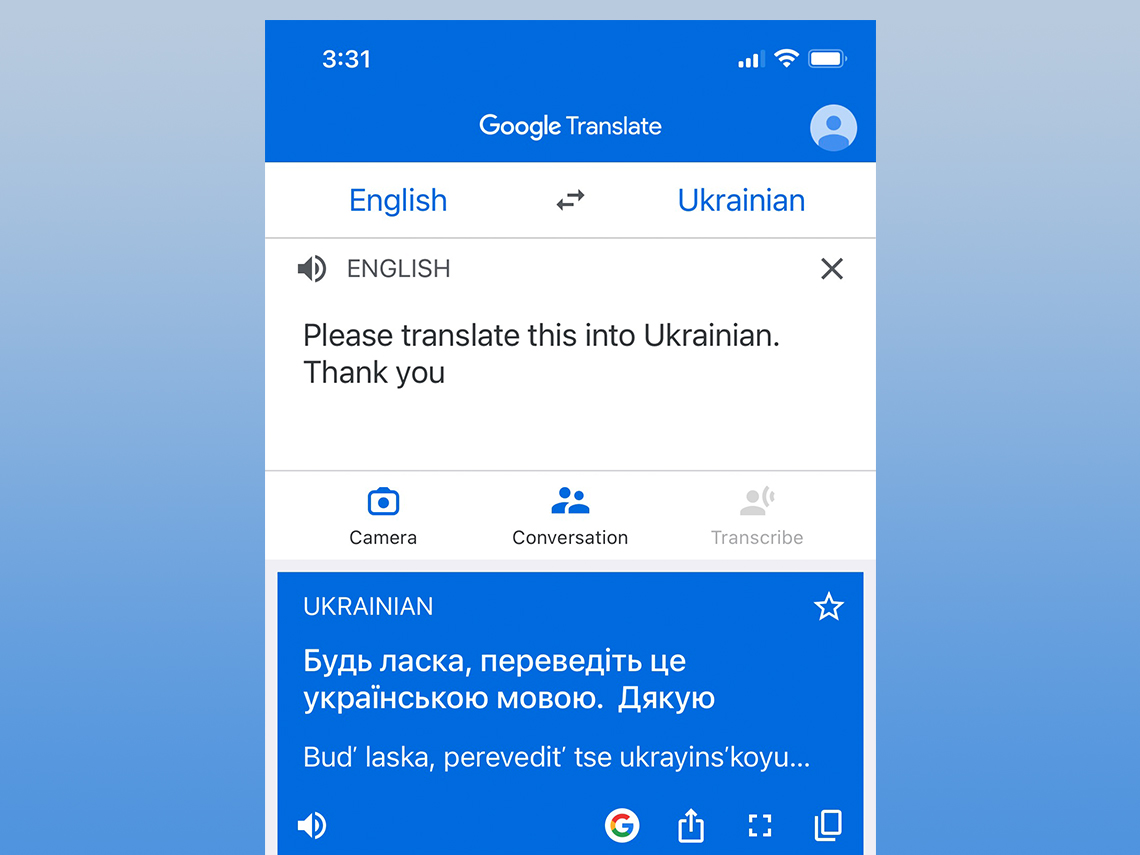

Whether you’re reading a foreign menu, asking for directions, listening to a lecture or trying to converse with a native, that help is as close as your smartphone. The Google Translate app has been available for Android devices and the iPhone for about a dozen years now. But if you have an iPhone, you might also open the Apple Translate app that Apple unveiled in 2020; it’s baked into iPhones running iOS 14 or later.

Both are free. While neither delivers perfect translations every time, they are increasingly powerful apps that under the right circumstances are magnifique.

Google translates 108 languages

Google Translate lets you type and translate between 108 languages, or 59 even when you lack internet connectivity. The app can also translate text found in images across 94 languages, translate conversations in near real time in 71 languages, and recognize drawn text characters in 96. You can also create a phrasebook on your device.

What’s more: You'll not only see translations on the phone display but can hear pronunciations of the words and phrases.

The Google Translate interface differs on the Android and iOS versions of the app, but the basic functionality is the same. Start by choosing the languages you want to translate from and to. If your chosen language is supported, download the file onto your phone. That way you can use the translation features even when you’re offline.

You have three main ways to use Google Translate:

- Select Camera to translate the text it sees.

- Go with Conversation mode to have a bilingual chat with a foreign speaker.

- Choose Transcribe to translate words and phrases you either utter aloud or type.

On both iPhones and Androids you can enter text in the spaces provided inside the app or tap a microphone icon to speak or pick up someone else’s voice.

On an iPhone, I tapped Transcribe and then the microphone icon to translate recent comments from Ukraine President Volodymyr Zelensky from Ukrainian to English.

Translate a conversation

When you tap Conversation, two people can speak in turn, each by pressing a microphone button and watching words appear in both languages. While in this mode, you can also tap a Both button on the iPhone or an Auto button on Android to have the phone listen for both languages. This can be handy not only while traveling, but also if a loved one, friend or someone you've hired is more comfortable speaking in their native language, but you're not fluent.

On the iPhone if you tap Camera inside Google Translate, recognized text in the original language is overwritten by the app. You can also snap a picture and select the words you want to translate. Incidentally, in Camera mode on the iPhone, the Google Translate name changes to Google Lens, a feature within the broader Google app.

On Android if you tap Camera, you can tap an Instant button to immediately translate whatever words show up on the screen. Or you can tap Scan to have the phone scan and capture whatever is in front of the camera. Then drag your finger to highlight specific text.

Another option lets you tap Import to translate text from a photo already in your library.

Google keeps transcriptions

Google points out that any audio you record for translation purposes will be transmitted to the company for processing. And to help improve the service, Google may keep a transcription of a conversation for “a limited period of time.” Google also wants you to make sure you have consent from the people around you before you translate their words.

You’ll also need to make sure in device settings that you give Google Translate permission to access the microphone on your phone. On Android, open Settings, and tap Privacy. Turn on microphone access. On iOS, open Settings, tap Privacy and then Microphone. Enable the button next to Google Translate to turn on microphone access.

Within the settings of the Google Translate app itself, you can choose to block offensive words or change pronunciation speeds, though the speed settings do not apply in Conversation mode.

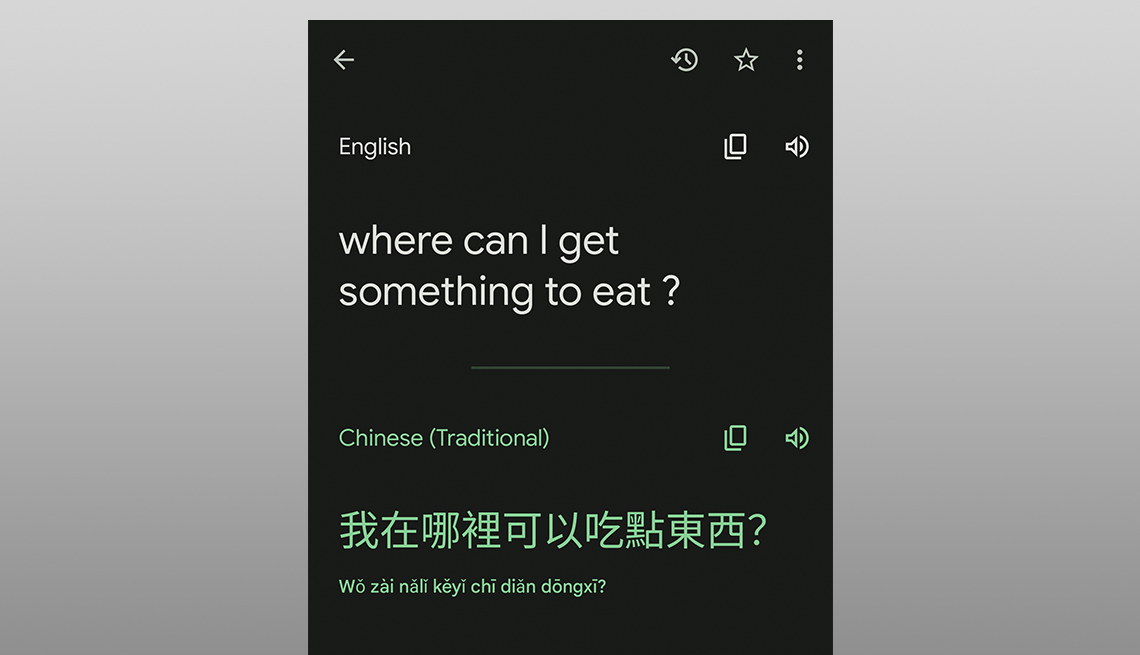

Apple translates 13 languages

The Apple Translate app supports far fewer languages than Google does, just 13 in total, including United States and United Kingdom versions of English and Chinese mainland and Taiwanese versions of Mandarin Chinese. All 13 can be downloaded for offline use.

As with Google, the audio and text you choose to have translated are sent to Apple’s servers for processing. Among Apple’s caveats: “Translate should not be relied on in circumstances where you could be harmed or injured, in high-risk situations, for navigation, or for the diagnosis or treatment of any medical condition.”

Otherwise, the basic functionality is like Google’s — after choosing your languages, you can type text or tap a microphone to speak. And Apple also features a Conversation mode. You can display the interface as side-by-side or face-to-face, where two people directly opposite one another will each see spoken dialog right side up.

Live Text lives in many iPhones' cameras

On an iPhone, you can also translate signs and other text seen through the camera lens. This isn’t done directly through the Apple Translate app but rather the Live Text feature added with iOS 15. The feature is compatible with all iPhones dating to 2018’s Xr or Xs models.

If you have a supported model, launch the Camera app, and point it at what you want to translate. A yellow frame appears around detected text. Tap the circled icon with horizontal lines inside open brackets. It’s toward the bottom on the right when you hold the phone in vertical portrait mode or upper right when you hold it sideways horizontally. It will turn yellow. Swipe or tap to select the appropriate text and tap Translate, which you may have to swipe to get to.

Translation apps are extremely useful, but some of the time anyway, you can use your phone even more easily when you want to know how to say something in another language. Just ask Google Assistant on Android phones or Siri on an iPhone. They can tell you in French, say, that c’est la fin de l’article.

Edward C. Baig is a contributing writer who covers technology and other consumer topics. He previously worked for USA Today, BusinessWeek, U.S. News & World Report and Fortune and is the author of Macs for Dummies and the coauthor of iPhone for Dummies and iPad for Dummies.

Company will help district with translation and interpretation of languages - Lehigh Acres Citizen - Translation

The Lee County School District is negotiating a contract with a company that will help translate and interpret 240 languages and dialects, which will further help the district communicate with families.

Student Enrollment Director Soretta Ralph said they have a bilingual staff within the student enrollment department, which is available to assist families.

“Oftentimes there are parents that come in that need assistance. We oftentimes don’t know what language they are going to come in speaking,” she said, adding that often it includes different dialects as well. “That dialect is sometimes another language.”

In order to communicate, Ralph said they often rely on Google Translate, as well as multiple staff members, to assist the family. Even when they get FOCUS up and running for student enrollment, it is still going to take a village to assist families.

Google Translate again is used when families contact them through the student enrollment email, so they can respond in the appropriate language.

ESOL Director Dr. Evelyn Rivera said they have a plan in place, as they are contracting with the company, Language Line Solutions, which provides translations and interpretations for the spoken and written language.

“The contract is going back and forth, fixing things on their end and our end,” she said.

When the contract is finalized, school and district staff will have support for 240 languages and dialects.

“It is going to be a wonderful support for everybody in here,” Rivera said.

There will be a special code to call on the phone, and within 20 seconds someone will be there to speak the language or dialect of the parent, she explained.

Ralph said the district is still growing, as in January alone they enrolled 1,200 new students. She said 160 of those students came to the district with IEPs.

The 2022-2023 application period began on Jan. 17 for enrollment. Ralph said 12,000 students have entered their applications for next school year.

“They have already been processed and this is the last week,” Ralph said last Tuesday afternoon. “We are getting 1,200 students in a month new to the school year. We have the same number of staff and we continue to grow. The school district is continuing to grow. We are really struggling as a district in every place.”

The School Board approved the English Language Learners Plan for school years 2022-2025.