The National Association of Realtors’ latest volley in its case against the U.S. Department of Justice includes images from Merriam-Webster’s dictionary that define the words “close” and “open,” a stark example of how far apart the two sides are as they battle over whether the DOJ can investigate two of NAR’s rules.

On Friday, the 1.5 million-member trade group filed a response to the DOJ’s opposition last month to a petition from NAR to quash or modify the federal agency’s civil investigative demand seeking new information on rules regarding buyer broker commissions and pocket listings.

The thrust of NAR’s argument is that the DOJ agreed — as part of a settlement agreement the agency abruptly withdrew from on July 1 — to close investigations into two rules: NAR’s Clear Cooperation Policy, which requires listing brokers to submit a listing to their MLS within one business day of marketing a property to the public, and NAR’s Participation Rule, which requires listing brokers to offer commissions to buyer brokers in order to participate in Realtor-affiliated multiple listing services.

NAR contends that the DOJ did not actually close the investigations and that the civil investigative demand the agency sent the trade group on July 6 continued those probes, which makes that demand “invalid.”

“In October 2020, the Antitrust Division unconditionally accepted NAR’s settlement offer, which required a commitment from the Antitrust Division to ‘close’ its investigation of the Participation Rule and Clear Cooperation Policy,” NAR’s attorneys wrote in the filing.

“In this context, the word ‘close’ is a verb that means ‘to bring to an end.’ That term must be construed according to its ‘ordinary meaning,’ and the Antitrust Division’s position, that it was free to ‘open’ that same investigation at any time, contradicts the clear meaning of the parties’ agreement.

“By its plain meaning, the verb ‘open’ means ‘to move (as a door) from a closed position’ or ‘to begin a course or activity.’ Thus, what the Antitrust Division has done is the exact opposite of what the word ‘close’ contemplates in the parties’ agreement.”

NAR’s response is 18 pages long with 547 pages of exhibits, including photographs of the dictionary entries for “close” and “open” starting on page 510.

‘Close’ means ‘close’

When it withdrew from the proposed settlement, the DOJ said publicly that it was concerned the agreement would limit its ability to protect real estate brokerage competition and would prevent it from pursuing other antitrust claims relating to NAR’s rules. Those statements “show that it had agreed to close its investigation of the Participation Rule and Clear Cooperation Policy, and that it could not have open investigations concerning either rule,” NAR asserts in its filing.

“The Antitrust Division’s actions also confirm that it understood ‘close’ means ‘close,’” NAR’s attorneys wrote.

“Before trying to withdraw from the settlement, the Antitrust Division asked NAR to modify the settlement agreement to allow it to investigate the Participation Rule and Clear Cooperation Policy.

“The fact that the Antitrust Division sought a modification of the settlement agreement is a concession that the settlement, unless modified, does in fact place limits on the Antitrust Division’s ability to investigate NAR’s Participation Rule and Clear Cooperation Policy.

“The words and actions of the Antitrust Division therefore refute its claim that the settlement agreement imposes no limitation on further investigation of the Participation Rule and Clear Cooperation Policy.”

In a letter sent the same day the settlement agreement was proposed in court, the DOJ informed NAR it had closed its investigations of the two policies. In that letter, the DOJ included a sentence that said, “No inference should be drawn, however, from the Division’s decision to close its investigation into these rules, policies or practices not addressed by the consent decree.”

According to NAR, that sentence does not mean that the DOJ reserved some right to future investigation of the policies because the DOJ did not ask for and the parties did not negotiate that term.

“Instead, it unconditionally accepted NAR’s settlement proposal, which did not include such a limitation, and that means that there was no such reservation in the settlement agreement,” the filing said.

“[T]hat sentence did not, and cannot, change the plain meaning of the settlement agreement. It only cautions third parties that they should not draw an inference from the Antitrust Division’s decision to close the investigation, which is non-controversial,” the filing added.

Because NAR did not negotiate the language of the closing letter or even see it before it was sent, “the second sentence of the letter cannot be considered part of the agreement if it takes on the meaning proposed by the Antitrust Division,” NAR’s attorneys wrote.

“A party cannot introduce a new, material term to a contract after an agreement is reached simply because it no longer likes part of the deal it struck.”

What the filing does not address

NAR’s filing makes clear that the trade group considers the settlement agreement binding on the government, so its arguments to set aside the latest probe hinge on the court enforcing the deal.

But in its own, previous filing, the DOJ argued that the deal was not final and therefore the agency could withdraw from it.

“In 2020, the United States and NAR discussed, and the United States eventually filed, a proposed settlement that would have culminated in entry of a consent judgment by the Court,” the DOJ’s filing said.

“But no consent judgment was ever entered.”

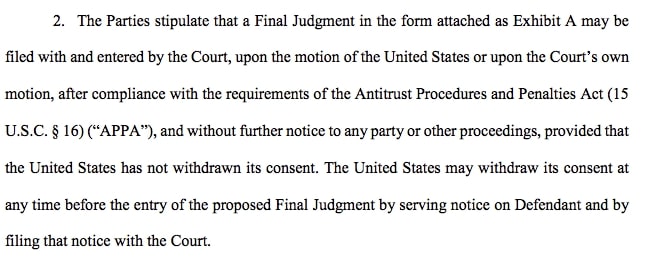

The Antitrust Procedures and Penalties Act, known as the Tunney Act, required a public notice and comment period before any final settlement with NAR, and it was during this process that the DOJ’s Antitrust Division concluded that the reservation of rights provision in the proposed final judgment “should be revised to avoid potential confusion about whether the judgment would foreclose further action by the Division on matters not covered by the judgment,” the DOJ’s attorneys wrote.

When NAR did not agree to the modification, the agency withdrew from the deal as permitted by paragraph 2 of the proposed settlement, they added.

Screenshot from proposed NAR-DOJ settlement

NAR’s latest filing does not address this particular argument from the DOJ.

The DOJ acknowledged that it did agree to issue a closing letter confirming to NAR that it had closed an investigation of two of NAR’s policies. However, the agency’s filing indicates that it considers its latest demand a new investigation and not a continuation of the previous one, as NAR asserts.

“The three-sentence closing letter contained no commitment to refrain from future investigations of NAR or its practices or from issuing new CIDs in conjunction with such investigations,” the DOJ filing said.

‘NAR is moving forward’

This week, at its Realtors Conference & Expo, NAR is considering three MLS policy proposals inspired by the contested settlement with the DOJ: a rule preventing buyer agents from touting their services as “free”; a ban on filtering listings by commission or brokerage name; and a policy requiring MLSs to display buyer broker commissions on their listing sites and in the data feeds they provide to agents and brokers.

“The Department of Justice’s (DOJ) withdrawal from a fully binding and executed agreement goes against public policy standards and consumer interests,” NAR spokesperson Mantill Williams said in an emailed statement.

“NAR is moving forward on the pro-consumer measures in the agreement and remains committed to regularly reviewing and updating our policies for local broker marketplaces in order to continue to advance efficient, equitable and transparent practices for the benefit of consumers.

“Allowing the DOJ to backtrack on our binding agreement would not only undermine public confidence that the government will keep its word, but also undercut the pro-consumer changes advanced in the agreement. NAR is living up to its commitments to consumers — we simply expect the DOJ to do the same.”

Email Andrea V. Brambila.
